Putting the Squeeze on Pixels

Video, codecs and containers.

Text:/ Stephen Dawson

When digital video arrived in 1986 it had one major problem: it was monstrously large. Way bigger than the pipelines and carriers then available could handle – indeed, bigger than those available today can.

How was video to be reduced so much in size that a whole movie could be delivered on a 12cm silver disc? How could that video be streamed over the still-limited bandwidth available to businesses and consumers? How could high definition – even UHD – video be captured on devices which use flash memory for storage?

The answer? Principally, increasingly-effective systems of data compression, along with logical containers to package the compressed video and its associated audio.

THE SIZE OF THE PROBLEM

Let’s get a sense of the size of the problem. We’ll keep things easy by starting with the familiar PAL DVD standards. These present 50 fields per second, and each field is 720 pixels wide by 288 pixels tall (DVDs have their video interlaced, so only half the vertical information is contained in each field). That makes for over 207,000 pixels per field, or more than 10.3 megapixels per second.

DVDs replicated the long-standing analogue TV delivery standards in which colour was provided with only one quarter of the resolution of the monochrome component of the video. Each pixel requires eight bits of data for the luminance (monochrome component) and four more bits for the two colour difference components. That’s 12 bits per pixel, or a byte-and-a-half. Do the multiplication and you’ll find that our SD video needs a throughput of more than 124Mbps.
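For anyone who'd rather not do the multiplication by hand, here is a minimal Python sketch of it. It uses only the figures quoted above; the variable names are purely illustrative.

```python
# Uncompressed PAL DVD video rate, using the figures quoted in the text.
FIELD_WIDTH = 720          # pixels per line
FIELD_HEIGHT = 288         # lines per field (interlaced, so half a frame)
FIELDS_PER_SECOND = 50
BITS_PER_PIXEL = 8 + 4     # 8 bits of luminance plus 4 bits of shared colour

pixels_per_field = FIELD_WIDTH * FIELD_HEIGHT
pixels_per_second = pixels_per_field * FIELDS_PER_SECOND
bits_per_second = pixels_per_second * BITS_PER_PIXEL

print(f"Pixels per field:  {pixels_per_field:,}")
print(f"Pixels per second: {pixels_per_second:,}")
print(f"Uncompressed rate: {bits_per_second / 1e6:.1f} Mbps")
```

This prints 207,360 pixels per field, 10,368,000 pixels per second and 124.4 Mbps.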

The runtime of Gladiator on a Region 4 DVD is 149 minutes. At that rate it would require the space of more than 16 dual-layer, single-sided DVDs. Instead, the actual video bitrate of Gladiator on DVD is 5.98Mbps (Collector’s Edition) or 6.45Mbps (Superbit version). These represent very impressive compression ratios of just 4.8% and 5.2% of the original size.
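The disc count and the compression percentages can be checked with a few lines of Python. This is a rough sketch: the 8.5GB dual-layer capacity is an assumed nominal figure rather than one given in the text, and the names are illustrative.

```python
# Figures from the text; the disc capacity is an assumption (nominal 8.5 GB).
UNCOMPRESSED_BPS = 720 * 288 * 50 * 12   # bits per second for PAL SD video
RUNTIME_MINUTES = 149
DUAL_LAYER_DVD_BYTES = 8.5e9             # assumed nominal capacity

total_bytes = UNCOMPRESSED_BPS * RUNTIME_MINUTES * 60 / 8
discs_needed = total_bytes / DUAL_LAYER_DVD_BYTES

print(f"Uncompressed movie: {total_bytes / 1e9:.0f} GB")
print(f"Dual-layer DVDs:    {discs_needed:.1f}")

# Actual video bitrates (Mbps) as a share of the uncompressed rate.
for label, mbps in [("Collector's Edition", 5.98), ("Superbit", 6.45)]:
    share = mbps * 1e6 / UNCOMPRESSED_BPS
    print(f"{label}: {share:.1%} of the uncompressed rate")
```

Under those assumptions the uncompressed movie comes to about 139GB, or a little over 16 discs.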

Re-run the figures for Gladiator on Blu-ray and you get an uncompressed bitrate of nearly 600Mbps. The actual measured bitrate of this movie on the disc is 17.66Mbps, so the compression squeezes it to less than 3% of the original size.
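The Blu-ray re-run looks like this as a sketch. The text doesn't spell out the frame size or frame rate, so 1920 by 1080 pixels at 24 frames per second is assumed here.

```python
WIDTH, HEIGHT = 1920, 1080     # assumed Blu-ray frame size
FRAMES_PER_SECOND = 24         # assumed film frame rate
BITS_PER_PIXEL = 12            # same 8 + 4 bit scheme as DVD
MEASURED_MBPS = 17.66          # measured video bitrate quoted in the text

uncompressed_bps = WIDTH * HEIGHT * FRAMES_PER_SECOND * BITS_PER_PIXEL
share = MEASURED_MBPS * 1e6 / uncompressed_bps

print(f"Uncompressed rate: {uncompressed_bps / 1e6:.1f} Mbps")
print(f"Compressed share:  {share:.2%}")
```

With those assumptions the uncompressed rate works out to 597.2 Mbps, and the disc's video to just under 3% of that.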

Yet, visually, it still looks glorious. So how do they do it?

DATA COMPRESSION THE ANALOGUE WAY

Unfortunately there is simply no way yet discovered for video data to be highly compressed without losses. The first losses occurred long before digital video. Video cameras typically work in RGB. That is, they separately capture the red, green and the blue elements of each picture. The colour detection systems in our eyes, as it happens, also work in RGB. But colour analogue TV transmissions used a different standard: YUV. RGB was converted to YUV, encoded onto the transmission signal, received by your TV, then turned back to RGB to recreate the image in your TV’s tube.

Y is the luminance (monochrome) part of the signal, while U and V are colour (chrominance) components. RGB can be converted to YUV without significant loss, and likewise the other way around.
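To illustrate the round trip, here is a minimal sketch using the commonly published BT.601-style analogue YUV weights. The article doesn't name a particular conversion matrix, so those coefficients are an assumption, as are the function names.

```python
# Assumed BT.601-style weights; other standards use different coefficients.
def rgb_to_yuv(r, g, b):
    """Convert one RGB sample to luminance (Y) and colour difference (U, V)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y)
    v = 0.877 * (r - y)
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv by undoing each step in turn."""
    b = y + u / 0.492
    r = y + v / 0.877
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


original = (200.0, 120.0, 40.0)
restored = yuv_to_rgb(*rgb_to_yuv(*original))
print(original, "->", tuple(round(c, 6) for c in restored))
```

Apart from floating-point rounding, the restored values match the originals. The real losses come from what is done to U and V afterwards.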

However, the human eye, as it turns out, is far less capable of resolving colour detail than it is monochrome detail: the cells in our retinas for the latter are far more numerous. To take advantage of this, the U and V information was transmitted only once for each group of four pixels, while the Y information was transmitted for every pixel (a process known as chroma sub-sampling). Were RGB or YUV with full colour resolution transmitted, it would involve twice as much data.

This scheme has continued for digital distribution channels such as DVD, Blu-ray and digital TV. In the digital domain, the numbers are clearer. The Y luminance channel gets eight bits of resolution, which works out to 256 levels of grey scale, while the U and V chrominance channels also get 256 levels each, but at one quarter of the spatial resolution. This certainly saves space when distributing video.
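A toy sketch of the idea: keep every Y sample, but store only one U and one V value per two-by-two block of pixels. Averaging the four values is an assumption made for simplicity; real encoders may filter and position the colour samples differently.

```python
def subsample_2x2(plane):
    """Average each 2x2 block of a colour plane (rows of ints, even dimensions)."""
    out = []
    for y in range(0, len(plane), 2):
        row = []
        for x in range(0, len(plane[0]), 2):
            total = (plane[y][x] + plane[y][x + 1]
                     + plane[y + 1][x] + plane[y + 1][x + 1])
            row.append(total // 4)
        out.append(row)
    return out


# A tiny 4x2 colour plane shrinks to 2x1.
u_plane = [[10, 20, 30, 40],
           [10, 20, 30, 40]]
print(subsample_2x2(u_plane))

# Sample counts for a 720 x 576 frame: full colour versus sub-sampled.
width, height = 720, 576
full_samples = 3 * width * height
subsampled = width * height + 2 * (width // 2) * (height // 2)
print(full_samples / subsampled)
```

The tiny plane reduces to `[[15, 35]]`, and the sample count ratio is 2.0, which is the "twice as much data" saving.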

Professional video cameras generally capture (if not always output) full resolution for all colour components and may offer 12, or even more, bits per component for smoother gradations of colour.

COMPRESSION ACROSS SPACE, THEN TIME

When you get to the digital video world there are essentially two kinds of compression systems. The first simply compresses each frame individually, without reference to the preceding and following frames, using a JPEG-style compression. Some examples of this are Motion JPEG, which still exists to this day; DV, the standard used for many years in consumer camcorders; and JPEG2000, which is used in the DCI format for cinematic exhibition. The DV system had some advantages compared to the formats now most in use. Principally it was relatively easy to edit because of the discrete frame structure. But at around 25Mbps for SD video, the compression is only to about 20% of the original.

In the late 1980s the basis of the current systems was developed in the form of H.261, which is the ancestor, or at least an influential uncle, to not only the H.26x line of video codecs, but also Microsoft’s VC1.

To fully understand these involves mastering such arcane concepts as motion vectors and discrete cosine transforms, which are well beyond the scope of this article. The important thing to understand is that with these compression systems the frames are no longer independent.

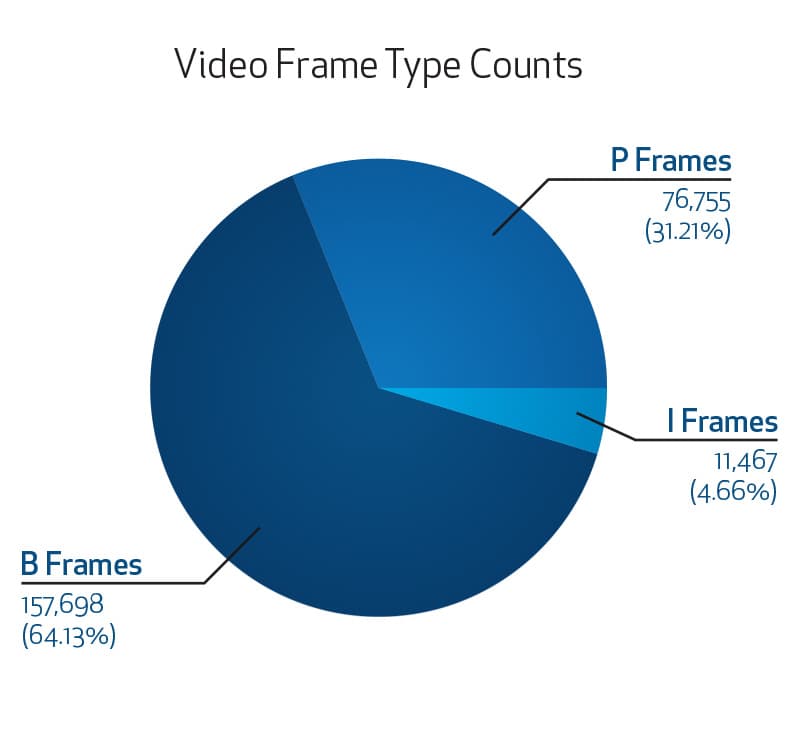

In addition to compressing individual frames, frames are compared to each other for further savings. It isn’t just a matter of looking for redundancies to eliminate, since it’s a rare piece of video programming that has a perfectly unchanging background. Instead the vector of the movement of objects (well, parts of objects as captured in macroblocks) is predicted for large space savings, with the inevitable errors corrected from an overlay of additional information. A small proportion of the frames are individually encoded (these are called Intra-Frames or I-Frames) while others show only adjustments from the preceding frame (Predicted-Frames or P-Frames) and others work in both directions (Bidirectional-Frames or B-Frames).
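As a very rough illustration of predicting movement, the toy Python below searches the previous frame for the block that best matches a block in the current frame. It is an exhaustive block-matching sketch under simplifying assumptions (tiny greyscale frames, a small search window, hypothetical function names), not a description of how any real H.26x encoder works.

```python
def block_difference(prev, cur, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in cur and a shifted block in prev."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - prev[by + y + dy][bx + x + dx])
    return total


def best_motion_vector(prev, cur, bx, by, size=4, search=2):
    """Return (difference, dx, dy) for the best-matching block in prev."""
    height, width = len(prev), len(prev[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates that would fall outside the previous frame.
            if by + dy < 0 or by + dy + size > height:
                continue
            if bx + dx < 0 or bx + dx + size > width:
                continue
            cost = block_difference(prev, cur, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


# An 8x8 test frame, and a copy with everything moved one pixel to the right.
prev_frame = [[7 * x + 13 * y for x in range(8)] for y in range(8)]
cur_frame = [[0] + row[:-1] for row in prev_frame]

print(best_motion_vector(prev_frame, cur_frame, bx=2, by=2))
```

Here the search returns `(0, -1, 0)`: the block is found one pixel to the left in the previous frame with nothing left over to correct. In real footage the match is rarely perfect, and the leftover difference is the additional information that has to be stored.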

This has remained the essence of compressed video delivery through various iterations of the H.26x standards. MPEG2 (whose video coding is also published as H.262) was for many years the most widely used on DVD and DVB broadcast TV. For 1080p consumer formats, H.264 (sometimes called AVC for Advanced Video Coding) has become the de-facto standard. This lies at the core of most internet video as well as most Blu-ray discs (which also accommodate MPEG2 and VC1) and even some forms of digital TV (the 3D experiments of a few years ago in some Australian capital cities used H.264).

WAITING IN THE WINGS

Poised to take over is H.265 which was specifically developed to double compression rates without reducing picture quality, a virtuous ambition given it’s designed to support not just 4K, but also 8K (8192 by 4320 pixels) resolutions. H.265 takes advantage of today’s more powerful processing compared to the year of H.264’s release (2003). Encoding has often been a trade-off between what’s mathematically optimal, and what’s computationally feasible, given the need to decode in real time. H.265 tilts the balance towards the mathematically optimal.

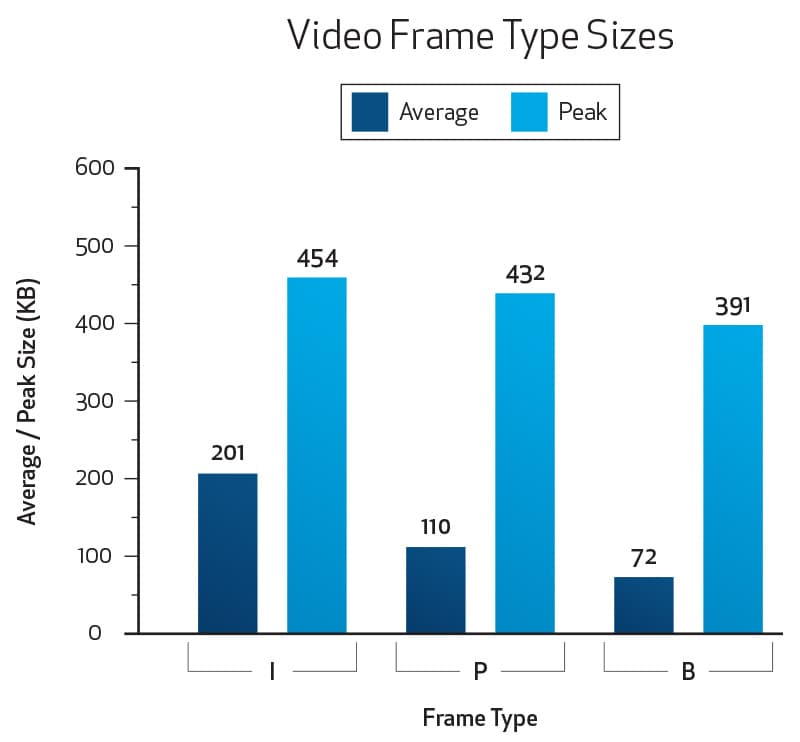

To give a sense of the data savings from these schemes let’s return briefly to Rusty’s sword and sandal epic, Gladiator. A single uncompressed Blu-ray-quality frame (ie. 12 bits per pixel) would come to 3037.5kB in size. Of the nearly a quarter of a million frames on this movie’s Blu-ray disc, fewer than 5% are I-Frames, and these average only 201kB in size. Some 31% are P-Frames, and these save more space, averaging 110kB. B-Frames are the most common at 64%, and most space-saving at an average of 72kB each.
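Those frame figures can be sanity-checked against the disc's overall bitrate. The sketch below assumes 1920 by 1080 frames at 24 frames per second and 1024 bytes per kB (which is what the 3037.5kB figure implies), and treats the I-Frame share as a full 5%, so it slightly overestimates.

```python
BYTES_PER_KB = 1024            # implied by the 3037.5kB figure
FRAMES_PER_SECOND = 24         # assumed film frame rate

# One uncompressed 1920 x 1080 frame at 12 bits per pixel.
uncompressed_kb = 1920 * 1080 * 12 / 8 / BYTES_PER_KB

# (share of all frames, average size in kB); I-Frame share rounded up to 5%.
frame_mix = {"I": (0.05, 201), "P": (0.31, 110), "B": (0.64, 72)}
average_kb = sum(share * size for share, size in frame_mix.values())

bitrate_mbps = average_kb * BYTES_PER_KB * 8 * FRAMES_PER_SECOND / 1e6

print(f"Uncompressed frame: {uncompressed_kb:.1f} kB")
print(f"Average frame:      {average_kb:.2f} kB")
print(f"Implied bitrate:    {bitrate_mbps:.1f} Mbps")
```

The average frame comes to about 90kB and the implied bitrate to roughly 17.7Mbps, in line with the measured bitrate for the disc.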

If H.265 implementations deliver what’s promised, then rather than the amount of data in content like Gladiator being reduced to less than 3% of the original, it will be reduced to below 1.5%.

CONTAINERS & THEIR CONTENTS

So far we’ve been talking about how video is encoded. But for most purposes video alone is of little use. In general, it needs to be accompanied at the very least by audio. Commonly it also needs sync data to keep the sound and video lined up, subtitles, possibly multiple other audio streams, metadata instructing the decoder or playing device to take particular actions, and sometimes other data only loosely-related to the actual video content (eg. the EPG and program information data in digital TV ‘transport streams’). This collection of content is generally delivered within a ‘container’ or ‘wrapper’.

Indeed, that’s also the case with digital audio, but things tend to be simpler there, with most codecs firmly associated with one standard wrapper.

With video, though, a particular wrapper may contain content in one of several different codecs, while any given codec may be found in several different wrappers.

For example, a wrapper very familiar to those who like to obtain movies and TV shows from the internet in a less than formal manner is Matroska [Aarrrr – He’s so polite – Ed]. The MKV file can contain video and multiple audio streams, all employing just about any codecs, along with subtitles. Your player may support MKV in general, but it may not necessarily support the weird video compression format contained therein.

Meanwhile MPEG-4, the reasonably popular video codec which was first specified in 1999, might appear in, amongst others, an MKV wrapper, or equally in an AVI one. Yet AVI was launched way back in 1992. Up until a couple of years ago some devices would play MPEG-4 when delivered in an AVI wrapper, but not in an MKV one, even though the MPEG-4 stream was effectively identical in both.
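One way to picture the problem is that a device's support is really a list of container-and-codec pairs, not two separate lists. The Python below is a purely hypothetical model of that idea; the player, its support table and all the names are invented for illustration and don't describe any real device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaFile:
    container: str
    video_codec: str
    audio_codecs: tuple


@dataclass(frozen=True)
class Player:
    name: str
    video_support: frozenset   # supported (container, video codec) pairs
    audio_support: frozenset   # supported audio codec names


def playback_problems(player, media):
    """List the reasons this (hypothetical) player would reject the file."""
    problems = []
    if (media.container, media.video_codec) not in player.video_support:
        problems.append(
            f"{media.video_codec} video in {media.container} is not supported")
    for codec in media.audio_codecs:
        if codec not in player.audio_support:
            problems.append(f"{codec} audio is not supported")
    return problems


# A made-up older player: MPEG-4 is fine in AVI, but not in MKV.
old_player = Player(
    name="Hypothetical Player",
    video_support=frozenset({("AVI", "MPEG-4"), ("MKV", "H.264")}),
    audio_support=frozenset({"MP3", "AC3"}),
)

print(playback_problems(old_player, MediaFile("AVI", "MPEG-4", ("MP3",))))
print(playback_problems(old_player, MediaFile("MKV", "MPEG-4", ("MP3",))))
```

The first file passes with an empty list of problems. The second is rejected even though the video stream is the same, purely because of the wrapper around it.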

ASSUME NOTHING

Modern professional and consumer playback equipment is incredibly versatile, as it must be to even begin to cope with the proliferation of video codecs, their containers, the other contents of those containers, and of course the various resolutions of the provided video (including, now, 4K).

But it’s far from certain that any particular combination will work in any particular device, making it critical to test the entire system well before your deadline.
