The SOH Grand Master LAN

Putting all your datagrams in one basket.

Text:/ Cameron O’Neill

About a decade ago I was operating the audio and vision for the Australian Telecommunication User Group (ATUG) annual conferences. There was a repeating theme: ‘convergence’. Every year the network gurus promised that they could save corporations more and more money by combining all of the disparate networks into one Grand Master Network.

Their claims weren’t far off. In 1998 a new standard, IEEE 802.1Q, was introduced to the world: Virtual Local Area Networks, or VLANs. Put simply, VLANs allowed high-end network switches to segregate network traffic into a number of virtual ‘Local’ networks, all sharing the same physical hardware.

Previously, this had been achieved by running separate cabling and switch infrastructure, but VLANs eliminated this requirement. Very quickly there was only one network running the print server, the email server, and the corporate website. All of this could be run over just a few cable connections, slashing the cost of corporate networking. Before long, all of the various corporate networks were ‘converged’ into one.
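For readers who think in code, the segregation idea can be sketched as a toy model. This is only an illustration, not how any particular switch is configured: the `VlanSwitch` class, the port names and the VLAN numbers are all invented for the example. Each port is given a VLAN ID, and a broadcast frame only reaches the other ports that carry the same ID, even though every device is plugged into the same box.

```python
class VlanSwitch:
    """Toy model of a VLAN-aware switch (hypothetical, for illustration only)."""

    def __init__(self):
        # Maps a port name to the VLAN ID assigned to that port.
        self.port_vlan = {}

    def assign(self, port, vlan_id):
        """Place a port into a VLAN."""
        self.port_vlan[port] = vlan_id

    def broadcast(self, src_port):
        """Return the ports that would receive a broadcast sent from src_port."""
        vlan = self.port_vlan[src_port]
        return sorted(
            port
            for port, port_vlan in self.port_vlan.items()
            if port_vlan == vlan and port != src_port
        )


# One physical switch, two virtual networks (VLAN IDs 10 and 20 are made up).
switch = VlanSwitch()
switch.assign("print-server", 10)
switch.assign("email-server", 10)
switch.assign("web-server", 20)
switch.assign("web-admin", 20)

office_reach = switch.broadcast("print-server")
web_reach = switch.broadcast("web-server")
```

In this sketch the print server can reach the email server but never the web server, which is the behaviour that once needed separate cabling and separate switches.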

Fast forward a decade. CobraNet, the first successful networked audio system, is starting to drift into retirement, replaced by newcomers like Dante. Unlike CobraNet, contemporary protocols use networking-friendly transport methods, allowing them to ‘play nice’ with standard networking equipment. In theory, you should be able to run a protocol such as Dante alongside your daily emails, Voice over Internet Protocol (VoIP) telephones and business systems. But can you? And, more to the point, should you?

ASKING THE BIG QUESTIONS

In 2008 I was part of a team that looked at the first question – can you? At the time, we didn’t even consider the second question – should you? The project was simple: use the Dante networking capability of the Dolby Lake processors to distribute the audio around the Concert Hall at the Sydney Opera House. All up there was a main left/right hang of d&b J series cabinets, and eight delay hangs of Q and T series. We wanted the flexibility to route any number of signals to any of the individual hangs, and Dante gave us the opportunity to do that.

As part of the design I specified Cisco switches. They had the reliability and speed that this network required. Sure, they were a little more expensive than a lower-grade switch, but they had all of the extra features that we wanted, and we knew that they would just work. When you’re talking about a critical system, the extra cost is easily justified. So we contacted the IT department and added one of their network administrators to the team. Little did we know that we were about to start on a three-year adventure into convergence.

One of the appealing features of the Cisco switches was their ability to send a distress email whenever they ran into trouble. Not only was this a good feature in terms of knowing if the system was operational or not, but it also meant that we could gather data on what was, at the time, an unproved concept. But, in order to get that extra data out of the switches, we needed to connect them to the email network, and thus our converged network was born.

To the network administrator this was a walk in the park. The goal was to create a new VLAN for the Dante traffic, and then simply install switches into the auditorium. These were then connected through a single, high-bandwidth link to the rest of the corporate network.

We ran the system up on the test bench, and, to our surprise, everything just worked. Audio entered one box, bounced around the network, and came out where it was supposed to. Further integration tests proved just as successful. The network treated the audio just like any other data, and everything made it through on time. The network administrator even challenged me to try and make his system break a sweat. Even trying my hardest, I couldn’t use more than 13% of the Cisco switch’s resources.

BRINGING THE QoS TO BEAR

One of the keys to our success was the use of Quality of Service, or QoS (pronounced kwos). This is another nifty feature of modern networks that lets you specify the relative importance of different types of data. More important data is put at the top of the transmission queue and sent first. Traditionally this has been used for systems such as VoIP telephony, because a live telephone call cannot tolerate delay the way an email can, and so is given a higher QoS ranking. But with a bit of help from our network administrator we were able to use QoS to make sure the audio data was sent first.
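The ‘top of the queue’ idea can be sketched in a few lines of Python. This is a minimal strict-priority model, not the mechanism inside a real switch, which classifies and queues traffic in hardware. The `PriorityScheduler` class, the traffic class names and the ranking (audio above VoIP above email) are assumptions made for the illustration.

```python
import heapq
import itertools

# Hypothetical ranking: a lower number is sent sooner.
PRIORITY = {"audio": 0, "voip": 1, "email": 2}


class PriorityScheduler:
    """Strict-priority transmit queue (illustrative sketch only)."""

    def __init__(self):
        self._heap = []
        # The counter breaks ties, so packets of equal priority leave in arrival order.
        self._arrival = itertools.count()

    def enqueue(self, traffic_class, payload):
        """Queue a packet according to the priority of its traffic class."""
        entry = (PRIORITY[traffic_class], next(self._arrival), traffic_class, payload)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        """Remove and return the most important waiting packet."""
        _, _, traffic_class, payload = heapq.heappop(self._heap)
        return traffic_class, payload

    def __len__(self):
        return len(self._heap)


def drain(scheduler):
    """Send everything in the queue and return the order it left in."""
    sent = []
    while len(scheduler):
        sent.append(scheduler.dequeue())
    return sent


scheduler = PriorityScheduler()
scheduler.enqueue("email", "weekly report")
scheduler.enqueue("audio", "sample block 1")
scheduler.enqueue("voip", "call frame 1")
scheduler.enqueue("audio", "sample block 2")

sent_order = drain(scheduler)
```

Although the email arrived first, both audio packets leave ahead of it, followed by the phone call, and packets within the same class keep their original order.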

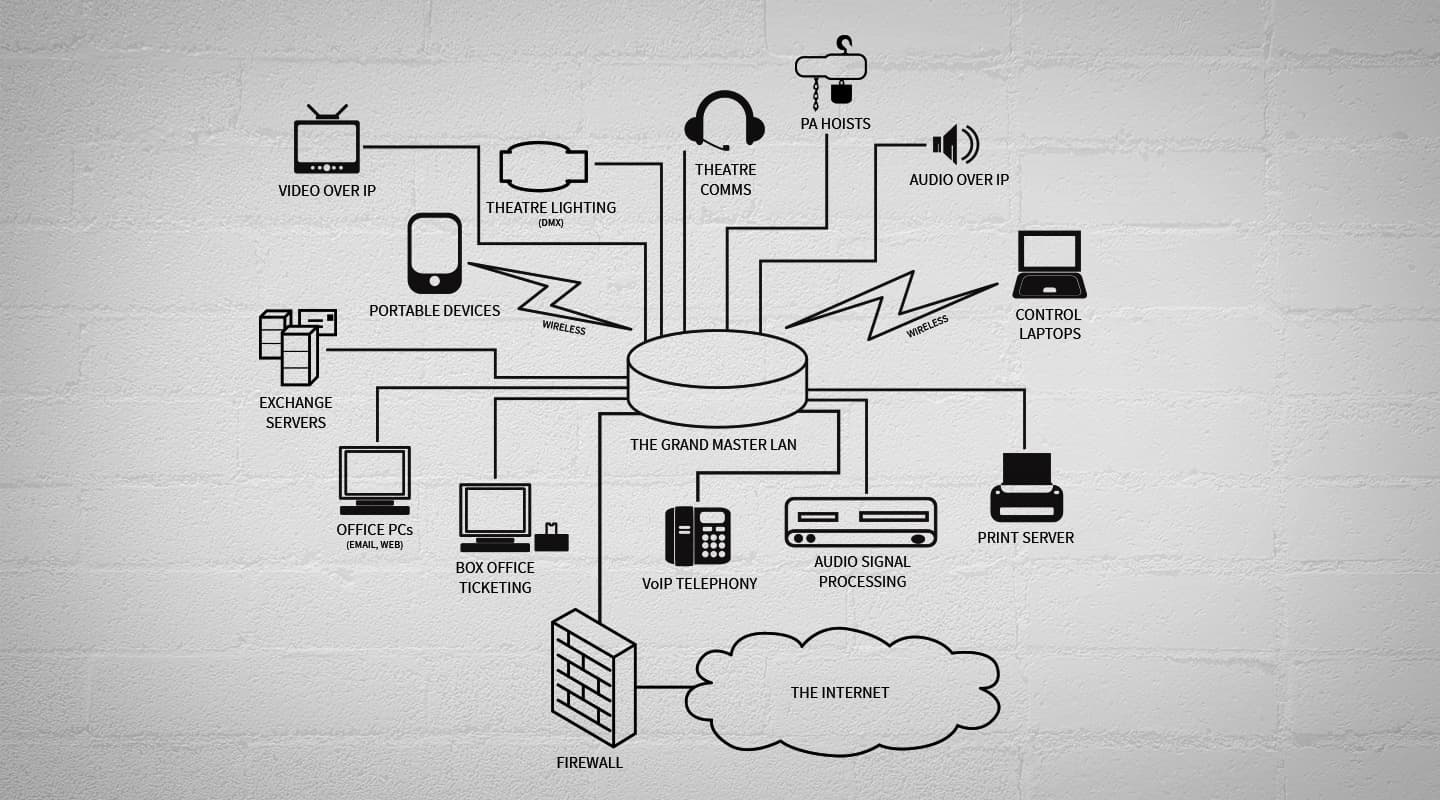

The network continued to grow from those first steps. Before long, the Production side of the network was almost as big as the Corporate side. The number of production systems increased to include communications, motor control, DMX-over-Ethernet and AMX control, all running seamlessly on the same infrastructure as the website, the ticketing system, the corporate email, the building control system and the telephones. Not only was all of this available on a cabled connection, but a few wireless access points also allowed access to any of these systems from practically anywhere in the building. Our corporate laptops changed from overpriced email readers to full-function theatre control devices.

ANSWERING THE BIG QUESTION – TOO LATE

It was only at this point that the question of whether we should converge the network started to make sense. Take, for example, a standard SOH Forecourt event. At an event like this, you’re going to have a production manager. They’re going to want their computer connected so that they can check their emails and print plans, and a telephone to order more of everything. They’ll want to speak to their crews, and to cut the amount of time that they spend running cable. Lighting crews will want to put DMX drops throughout the space, and the audio crew will want to control their speaker management system as they wander the audience area. The box office staff will want to be able to print tickets and capture customer information, and the security team will want to monitor the crowds from their temporary control room. The OB truck will want telephones to mission control, and the talent will want to Tweet about everything that is going on around them. And all of that comes down a single fibre pair running back into the building. Total setup time: about three hours.

Of course, this isn’t for the faint of heart. All told, the network took three years to set up, and there were some casualties. Due to software stability problems with the Lake processors, the original Dante network that started the revolution has since been switched off, replaced with a network of AES audio feeding a smaller number of Lake processors. Despite such setbacks, the network has lived on, and the benefits we were promised a decade ago at ATUG are still felt today.

A close working relationship was created and maintained between the Theatre Engineering and Information Systems teams, without which the whole network would have failed. But, once the investment in time and effort was complete, we were left with a solid, future-proof, and totally converged network.
